On unethical Facebook experiments

A few thoughts on the recent furore regarding psychological experiments on Facebook users, where the subjects were not aware that they were being experimented on.

Should we ‘expect’ Facebook to behave ethically?

It’s worth noting that the word “expect” has two subtly different meanings. To expect something can be to consider it likely: “We expect to make a net gain from this event.” It can also be to consider it reasonable, to seek it with some (often moral) justification: “Attendees are expected to abide by the code of conduct.”

I agree that we should not consider it likely that Facebook will behave ethically. However, I think we should hold Facebook to a high moral standard. If we simply shrug, say “that’s just how it is,” and allow them to continue, then we deserve everything we get.

Now that that is out of the way:

Are these experiments surprising?

No. Given Facebook’s track record, it is clear that they do not care about their users. Facebook’s history is full of episodes in which they failed to respect your privacy as a user: tracking you all over the web, constantly assaulting your ability to keep your information private, and shipping mobile apps that require a ridiculous level of access to your phone (such as getting your contact list, reading your SMS messages, or turning on your microphone or camera at any time without confirmation). There’s also the fact that algorithms rather than people often decide whether users have broken the community standards and should be blocked (these algorithms, needless to say, are hopelessly inadequate), as well as a completely unbalanced approach to removing inappropriate content (breastfeeding pictures are deemed unacceptable, while hate groups dedicated to praising murderers are left alone).

Does that make these revelations insignificant?

Of course not! Something about these experiments has upset a lot of people, regardless of whether they would have been upset had they all been behaving perfectly rationally. Reminder: behaving perfectly rationally is not something humans tend to do.

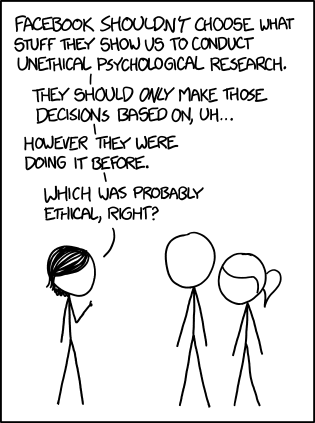

It’s true that this seems an odd time to start being bothered about Facebook manipulating news feeds; the xkcd comic points out the cognitive inconsistency that many people (including me) have been guilty of. But it’s far more interesting and useful to look at the issues that have been raised:

- What level of control over our emotions does Facebook have? What about other aspects of our behaviour? What about our ability to communicate with friends?

- What can we expect them to do, or not to do, with this control? Do we trust them with it?

- How do our priorities interact (or conflict) with theirs?

Here are some potential situations to think about.

Facebook could very well make an informed estimate of the political views of large numbers of users based on the statuses they post and the things they like and comment on. Combine this with a recent feature that lets users tell their friends that they have voted and shows users who have not yet voted where their nearest polling station is. This feature has, in the past, increased turnout enough to sway an election. Suppose that Facebook had some interest in the outcome of an election. It seems very feasible for them to manipulate it.

Suppose, now, that some government has done a number of things that have greatly upset its electorate. Large protests are being planned, which makes this government uncomfortable. It certainly seems plausible that Facebook could identify the people who are most likely to persuade others to participate in protest action. Suppose this government approached Facebook and demanded that they manipulate these people. How could they do this? Might they boost posts that promote a sense of hopelessness? Might posts calling people to action be quietly tucked away by the mysterious and opaque news feed algorithm? Might accounts be banned based on spurious evidence?

I think it’s important to point out that, regardless of whether Facebook would actually do these things, it is not acceptable for them to be in a position where they could do so without anybody having a clue that it is happening. We must not allow Facebook (or any other party) to have this level of control over our lives. Facebook is too important to be owned by Facebook.

Facebook (and all the other companies whose business model is corporate surveillance) tell us that we don’t have to use their services; if we don’t like the idea of having them mine our data, we can always use other options. Unfortunately, this isn’t really the case: having an email address is more or less mandatory for modern life, and deactivating a Facebook account will limit your ability to keep in touch with your friends. It’s true that you can always switch email providers. However, the well-known ones (Gmail, Outlook.com, Yahoo, iCloud) are all pretty much the same with respect to corporate surveillance, and whenever you send an email to someone who uses one of these four, your data is still captured and mined. What proportion of your contacts do you think use one of these four services? 95%? 99%?

Final thoughts

It all looks rather bleak. Thankfully there are some very capable people working on this problem:

- Indie Phone’s goal is to empower people to own their own data. This necessitates a free (libre) and open source operating system for the phone, in addition to a server in the cloud to store your data on.

- sovereign is an executable blueprint of a personal cloud server, with email, file storage (like Dropbox), contact and calendar sync, and more. Unfortunately, it is only suitable for experienced Linux users at the moment.

- Cloud Fleet looks like a similar idea to sovereign; unfortunately the website doesn’t give much away.

In the meantime here are some suggestions:

- Watch these talks: Digital Feudalism and How To Avoid It, and Free is a Lie

- Read these blog posts: Indie Data, and It’s the End of the Web As We Know It

- Switch to a free, open source operating system, like Ubuntu. I’ve been using Ubuntu as my main operating system for the last three years, and Linux on the desktop is pretty solid nowadays.

- Go through your Facebook privacy settings and lock them down, especially for Platform and Ads. Better yet, deactivate your Facebook account.